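k8s multi-cluster configuration management platform

Characteristics of the temporary cluster

Simulated production environment

Overall environment description

Intranet: 10.17.1.44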

    [root@localhost account-server]# kubectl get nodes
    NAME        STATUS   ROLES    AGE   VERSION
    localhost   Ready    master   25h   v1.17.5
    [root@localhost account-server]# kubectl get pods -A
    NAMESPACE       NAME                                        READY   STATUS      RESTARTS   AGE
    cattle-system   cattle-cluster-agent-689f8dcc64-7slpk       1/1     Running     0          78m
    cattle-system   cattle-node-agent-7lndv                     1/1     Running     0          78m
    ingress-nginx   nginx-ingress-controller-74879f74c6-jdzx9   1/1     Running     0          24h
    kong            ingress-kong-d7b5d68f4-j6tsx                2/2     Running     2          25h
    kube-system     calico-kube-controllers-69cb4d4df7-447m5    1/1     Running     0          25h
    kube-system     calico-node-7cb2w                           1/1     Running     0          25h
    kube-system     coredns-9d85f5447-x5l4c                     1/1     Running     0          25h
    kube-system     coredns-9d85f5447-zqgh7                     1/1     Running     0          25h
    kube-system     etcd-localhost                              1/1     Running     0          25h
    kube-system     kube-apiserver-localhost                    1/1     Running     0          25h
    kube-system     kube-controller-manager-localhost           1/1     Running     0          25h
    kube-system     kube-proxy-gdtdt                            1/1     Running     0          25h
    kube-system     kube-scheduler-localhost                    1/1     Running     0          25h
    kube-system     metrics-server-6fcdc7bddc-ggsnm             1/1     Running     0          25h

myaliyun: 47.1x6.x9.1x6

Rancher is deployed on this node.

    [root@zisefeizhu ~]# docker run -d --restart=unless-stopped \
      -p 80:80 -p 443:443 \
      rancher/rancher:latest
    [root@zisefeizhu ~]# free -h
                  total        used        free      shared  buff/cache   available
    Mem:           3.7G        1.1G        320M        640K        2.3G        2.3G
    Swap:            0B          0B          0B
    [root@zisefeizhu ~]# docker ps
    CONTAINER ID   IMAGE                    COMMAND           CREATED       STATUS       PORTS                                      NAMES
    87aeb7e4a262   rancher/rancher:latest   "entrypoint.sh"   2 hours ago   Up 2 hours   0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp   angry_visvesvaraya

Temporary environment (simulated production): see the figure.

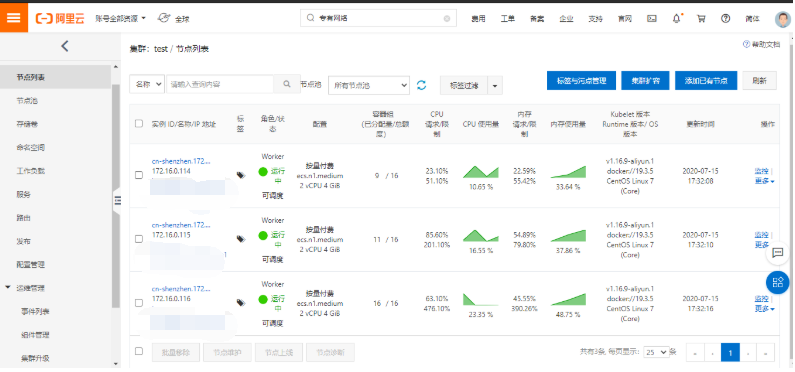

    [root@mobanji .kube]# kubectl get pods -A
    NAMESPACE     NAME                                                   READY   STATUS      RESTARTS   AGE
    arms-prom     arms-prometheus-ack-arms-prometheus-7b4d578f86-ff824   1/1     Running     0          9m26s
    arms-prom     kube-state-metrics-864b9c5857-ljb6l                    1/1     Running     0          9m42s
    arms-prom     node-exporter-6ttg5                                    2/2     Running     0          9m42s
    arms-prom     node-exporter-rz7rr                                    2/2     Running     0          9m42s
    arms-prom     node-exporter-t45zj                                    2/2     Running     0          9m42s
    kube-system   ack-node-problem-detector-daemonset-2r9bg              1/1     Running     0          9m14s
    kube-system   ack-node-problem-detector-daemonset-mjk4k              1/1     Running     0          9m15s
    kube-system   ack-node-problem-detector-daemonset-t94p4              1/1     Running     0          9m13s
    kube-system   ack-node-problem-detector-eventer-6cb57ffb68-gl5n5     1/1     Running     0          9m44s
    kube-system   alibaba-log-controller-795d7486f8-jrcv2                1/1     Running     2          9m45s
    kube-system   alicloud-application-controller-bc84954fb-z5dq9        1/1     Running     0          9m45s
    kube-system   alicloud-monitor-controller-7c8c5485f4-xdrdd           1/1     Running     0          9m45s
    kube-system   aliyun-acr-credential-helper-59b59477df-p7vxc          1/1     Running     0          9m46s
    kube-system   coredns-79989b94b6-47vll                               1/1     Running     0          9m46s
    kube-system   coredns-79989b94b6-dqpjm                               1/1     Running     0          9m46s
    kube-system   csi-plugin-2kjc9                                       9/9     Running     0          9m45s
    kube-system   csi-plugin-5lt7b                                       9/9     Running     0          9m45s
    kube-system   csi-plugin-px52m                                       9/9     Running     0          9m45s
    kube-system   csi-provisioner-56d7fb44b6-6czqx                       8/8     Running     0          9m45s
    kube-system   csi-provisioner-56d7fb44b6-jqsrt                       8/8     Running     1          9m45s
    kube-system   kube-eventer-init-mv2vj                                0/1     Completed   0          9m44s
    kube-system   kube-flannel-ds-4xbhr                                  1/1     Running     0          9m46s
    kube-system   kube-flannel-ds-mfg7m                                  1/1     Running     0          9m46s
    kube-system   kube-flannel-ds-wnrp2                                  1/1     Running     0          9m46s
    kube-system   kube-proxy-worker-44tkt                                1/1     Running     0          9m45s
    kube-system   kube-proxy-worker-nw2g6                                1/1     Running     0          9m45s
    kube-system   kube-proxy-worker-s78vl                                1/1     Running     0          9m45s
    kube-system   logtail-ds-67lkm                                       1/1     Running     0          9m45s
    kube-system   logtail-ds-68fdz                                       1/1     Running     0          9m45s
    kube-system   logtail-ds-bkntm                                       1/1     Running     0          9m45s
    kube-system   metrics-server-f88f9b8d8-l9zl4                         1/1     Running     0          9m46s
    kube-system   nginx-ingress-controller-758cdc676c-k8ffj              1/1     Running     0          9m46s
    kube-system   nginx-ingress-controller-758cdc676c-pxzxd              1/1     Running     0          9m46s

Test points

Feasibility verification

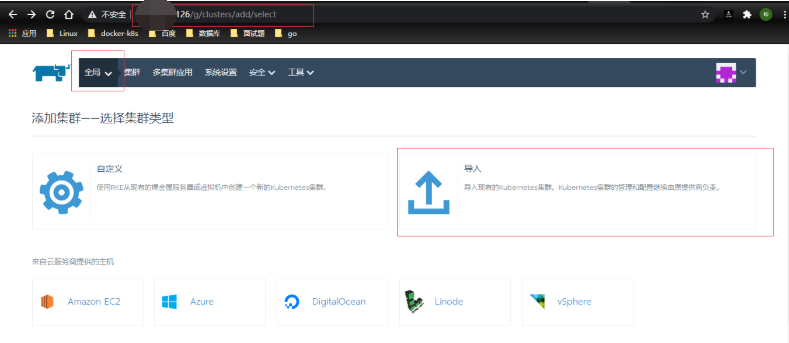

Deploy Rancher for the temporary environment and use it to manage both the temporary environment and the intranet environment.

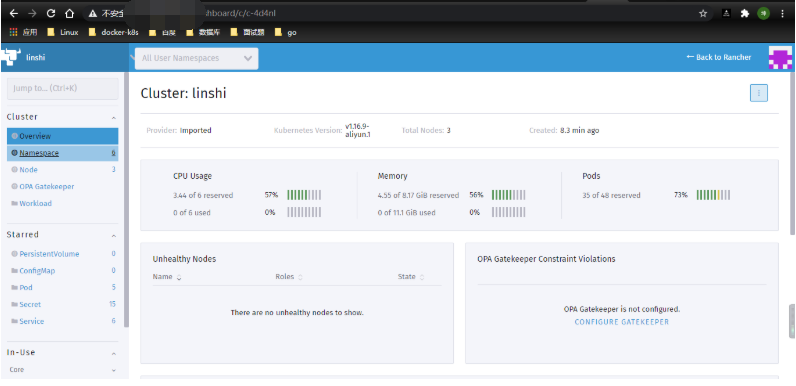

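OK! For now, Rancher manages both k8s clusters successfully, which shows that Rancher can manage multiple k8s clusters.

Temporary environment

Intranet environment

This completes the feasibility verification.

Verification of foreseeable problems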

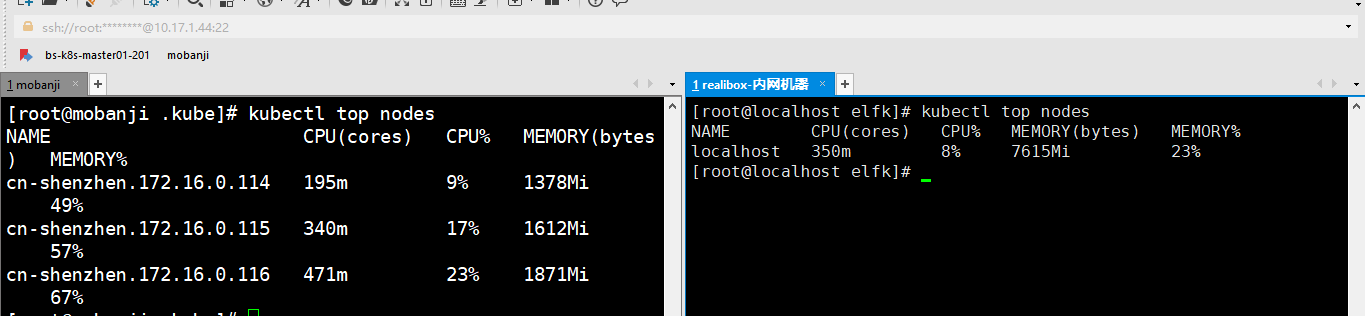

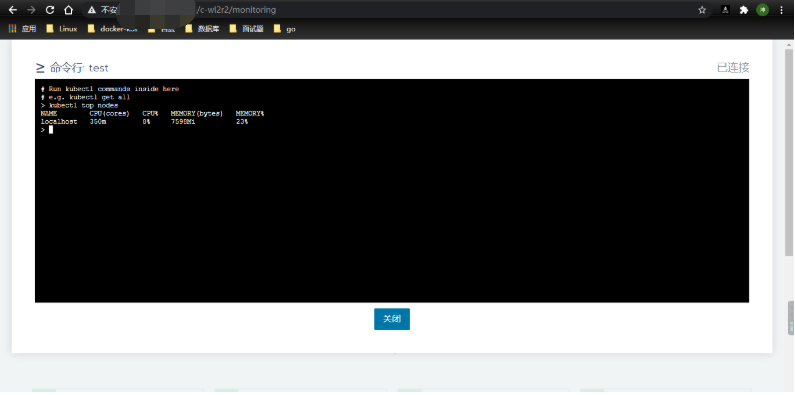

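Test whether Rancher web and the k8s cluster's own kubectl can operate at the same time

The k8s cluster works normally from its own side.

Rancher web works normally.

This shows that Rancher web and the cluster's own kubectl can manage the cluster at the same time.

Delete the Rancher on myaliyun, start a new Rancher, and see whether it can still manage the clusters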

    [root@zisefeizhu ~]# docker ps
    CONTAINER ID   IMAGE                    COMMAND           CREATED       STATUS       PORTS                                      NAMES
    87aeb7e4a262   rancher/rancher:latest   "entrypoint.sh"   2 hours ago   Up 2 hours   0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp   angry_visvesvaraya
    [root@zisefeizhu ~]# docker container rm -f 87aeb7e4a262
    87aeb7e4a262

Observe the k8s cluster:

    [root@mobanji .kube]# kubectl get pods -A -w
    NAMESPACE       NAME                                                   READY   STATUS      RESTARTS   AGE
    arms-prom       arms-prometheus-ack-arms-prometheus-7b4d578f86-ff824   1/1     Running     0          22m
    arms-prom       kube-state-metrics-864b9c5857-ljb6l                    1/1     Running     0          22m
    arms-prom       node-exporter-6ttg5                                    2/2     Running     0          22m
    arms-prom       node-exporter-rz7rr                                    2/2     Running     0          22m
    arms-prom       node-exporter-t45zj                                    2/2     Running     0          22m
    cattle-system   cattle-cluster-agent-7d99b645d9-s5gqh                  1/1     Running     0          16m
    cattle-system   cattle-node-agent-9s4tw                                0/1     Pending     0          16m
    cattle-system   cattle-node-agent-m8rhk                                1/1     Running     0          17m
    cattle-system   cattle-node-agent-nkl5m                                1/1     Running     0          17m
    kube-system     ack-node-problem-detector-daemonset-2r9bg              1/1     Running     0          22m
    kube-system     ack-node-problem-detector-daemonset-mjk4k              1/1     Running     0          22m
    kube-system     ack-node-problem-detector-daemonset-t94p4              1/1     Running     0          22m
    kube-system     ack-node-problem-detector-eventer-6cb57ffb68-gl5n5     1/1     Running     0          22m
    kube-system     alibaba-log-controller-795d7486f8-jrcv2                1/1     Running     2          22m
    kube-system     alicloud-application-controller-bc84954fb-z5dq9        1/1     Running     0          22m
    kube-system     alicloud-monitor-controller-7c8c5485f4-xdrdd           1/1     Running     0          22m
    kube-system     aliyun-acr-credential-helper-59b59477df-p7vxc          1/1     Running     0          23m
    kube-system     coredns-79989b94b6-47vll                               1/1     Running     0          23m
    kube-system     coredns-79989b94b6-dqpjm                               1/1     Running     0          23m
    kube-system     csi-plugin-2kjc9                                       9/9     Running     0          22m
    kube-system     csi-plugin-5lt7b                                       9/9     Running     0          22m
    kube-system     csi-plugin-px52m                                       9/9     Running     0          22m
    kube-system     csi-provisioner-56d7fb44b6-6czqx                       8/8     Running     0          22m
    kube-system     csi-provisioner-56d7fb44b6-jqsrt                       8/8     Running     1          22m
    kube-system     kube-eventer-init-mv2vj                                0/1     Completed   0          22m
    kube-system     kube-flannel-ds-4xbhr                                  1/1     Running     0          23m
    kube-system     kube-flannel-ds-mfg7m                                  1/1     Running     0          23m
    kube-system     kube-flannel-ds-wnrp2                                  1/1     Running     0          23m
    kube-system     kube-proxy-worker-44tkt                                1/1     Running     0          22m
    kube-system     kube-proxy-worker-nw2g6                                1/1     Running     0          22m
    kube-system     kube-proxy-worker-s78vl                                1/1     Running     0          22m
    kube-system     logtail-ds-67lkm                                       1/1     Running     0          22m
    kube-system     logtail-ds-68fdz                                       1/1     Running     0          22m
    kube-system     logtail-ds-bkntm                                       1/1     Running     0          22m
    kube-system     metrics-server-f88f9b8d8-l9zl4                         1/1     Running     0          23m
    kube-system     nginx-ingress-controller-758cdc676c-k8ffj              1/1     Running     0          23m
    kube-system     nginx-ingress-controller-758cdc676c-pxzxd              1/1     Running     0          23m

Neither the temporary-environment nor the intranet k8s cluster changed.
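The "nothing changed" check here was done by eye. As a rough sketch (not part of the original test), the comparison could be scripted by saving the output of `kubectl get pods -A` before and after removing Rancher and comparing everything except the RESTARTS and AGE columns. The function names and snapshot file names are hypothetical, and the sketch assumes the default six-column output with a header line.

```shell
# Reduce `kubectl get pods -A` output (read from stdin) to the columns
# that should stay stable: NAMESPACE, NAME, READY, STATUS.
normalize_pods() {
  awk 'NR > 1 { print $1, $2, $3, $4 }' | sort
}

# Succeeds (exit status 0) when two saved snapshots list the same pods
# in the same READY/STATUS state; AGE and RESTARTS are ignored.
pods_unchanged() {
  before=$(normalize_pods < "$1")
  after=$(normalize_pods < "$2")
  [ "$before" = "$after" ]
}
```

One possible usage: run `kubectl get pods -A > before.txt`, remove the Rancher container, run `kubectl get pods -A > after.txt`, then `pods_unchanged before.txt after.txt && echo unchanged`.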

    [root@zisefeizhu ~]# docker run -d --restart=unless-stopped \
    > -p 80:80 -p 443:443 \
    > rancher/rancher:latest
    bdc79c85e179ab9bb481a391029ac7e03a19252ede71860992971e90f6e871bd

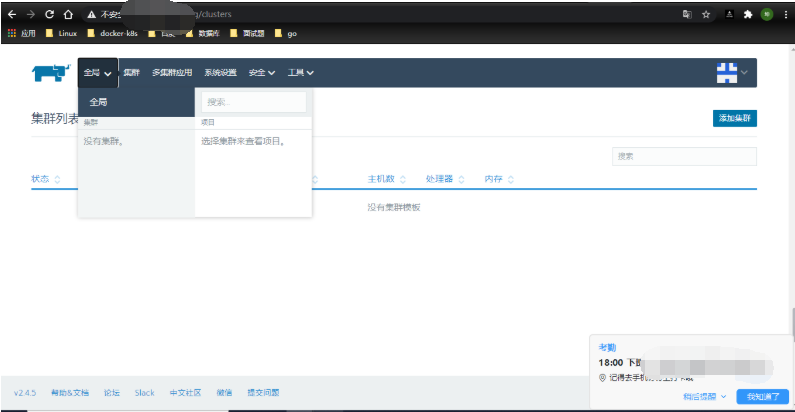

The k8s clusters are gone from Rancher web, so add them again:

    [root@mobanji .kube]# curl --insecure -sfL https://47.1xx.x9.1x6/v3/import/tgnmhhj6n2s5tc4prw9ft4pnd87w6sfw54487lrj2k5rkd26zwg8.yaml | kubectl apply -f -
    clusterrole.rbac.authorization.k8s.io/proxy-clusterrole-kubeapiserver unchanged
    clusterrolebinding.rbac.authorization.k8s.io/proxy-role-binding-kubernetes-master unchanged
    namespace/cattle-system unchanged
    serviceaccount/cattle unchanged
    clusterrolebinding.rbac.authorization.k8s.io/cattle-admin-binding unchanged
    secret/cattle-credentials-e50bc99 created
    clusterrole.rbac.authorization.k8s.io/cattle-admin unchanged
    deployment.apps/cattle-cluster-agent configured
    daemonset.apps/cattle-node-agent configured
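Since the same import command is repeated for every cluster and every new Rancher instance, it may be convenient to wrap it. A minimal sketch, assuming the URL pattern seen in the command above (`https://<host>/v3/import/<token>.yaml`); the function names are hypothetical, and the token must still be copied from the Rancher UI:

```shell
# Build the import manifest URL.
# $1 = Rancher host (optionally host:port), $2 = import token from the Rancher UI.
rancher_import_url() {
  printf 'https://%s/v3/import/%s.yaml\n' "$1" "$2"
}

# Fetch the manifest and apply it to the cluster that kubectl currently points at.
# --insecure mirrors the original command (presumably because this test
# Rancher serves a self-signed certificate).
rancher_import() {
  curl --insecure -sfL "$(rancher_import_url "$1" "$2")" | kubectl apply -f -
}
```

For example, `rancher_import 10.17.1.44:1443 <token>` would register the current cluster with the intranet Rancher.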

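This shows that an unexpected Rancher outage has no effect on the k8s clusters.

Can two Ranchers manage the same cluster at the same time?

Deploy Rancher in the intranet environment

Note: because the temporary environment cannot reach the intranet, this can only be tested in the intranet environment.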

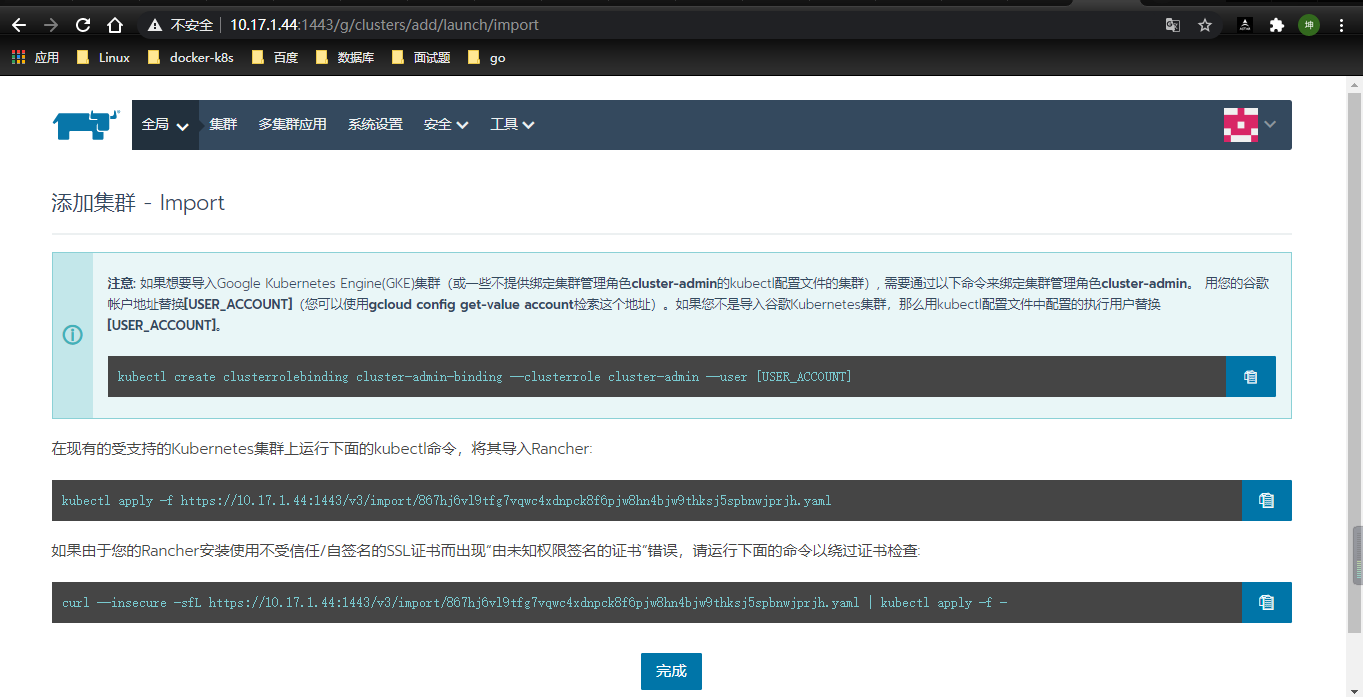

At this point the intranet cluster is registered with the Rancher deployed for the temporary environment. Now add the intranet k8s cluster directly to the Rancher in the intranet environment:

    [root@localhost elfk]# curl --insecure -sfL https://10.17.1.44:1443/v3/import/867hj6vl9tfg7vqwc4xdnpck8f6pjw8hn4bjw9thksj5spbnwjprjh.yaml | kubectl apply -f -
    clusterrole.rbac.authorization.k8s.io/proxy-clusterrole-kubeapiserver unchanged
    clusterrolebinding.rbac.authorization.k8s.io/proxy-role-binding-kubernetes-master unchanged
    namespace/cattle-system unchanged
    serviceaccount/cattle unchanged
    clusterrolebinding.rbac.authorization.k8s.io/cattle-admin-binding unchanged
    secret/cattle-credentials-6375cce created
    clusterrole.rbac.authorization.k8s.io/cattle-admin unchanged
    deployment.apps/cattle-cluster-agent configured
    daemonset.apps/cattle-node-agent configured

Management from the intranet k8s side works normally:

    [root@localhost elfk]# kubectl get pods -A
    NAMESPACE           NAME                                                       READY   STATUS      RESTARTS   AGE
    cattle-prometheus   exporter-kube-state-cluster-monitoring-dcf459bb8-t8qnj     1/1     Running     0          98m
    cattle-prometheus   exporter-node-cluster-monitoring-9kdvj                     1/1     Running     0          98m
    cattle-prometheus   grafana-cluster-monitoring-698d79ff64-tv8hx                2/2     Running     0          98m
    cattle-prometheus   prometheus-cluster-monitoring-0                            5/5     Running     1          95m
    cattle-prometheus   prometheus-operator-monitoring-operator-5f5d4c5987-8fjtq   1/1     Running     0          98m
    cattle-system       cattle-cluster-agent-56c4d48669-dpftm                      1/1     Running     0          73s
    cattle-system       cattle-node-agent-p8r5x                                    1/1     Running     0          69s
    ingress-nginx       nginx-ingress-controller-74879f74c6-jdzx9                  1/1     Running     0          25h
    kong                ingress-kong-d7b5d68f4-j6tsx                               2/2     Running     2          25h
    kube-system         calico-kube-controllers-69cb4d4df7-447m5                   1/1     Running     0          25h
    kube-system         calico-node-7cb2w                                          1/1     Running     0          25h
    kube-system         coredns-9d85f5447-x5l4c                                    1/1     Running     0          26h
    kube-system         coredns-9d85f5447-zqgh7                                    1/1     Running     0          26h
    kube-system         etcd-localhost                                             1/1     Running     0          26h
    kube-system         kube-apiserver-localhost                                   1/1     Running     0          26h
    kube-system         kube-controller-manager-localhost                          1/1     Running     0          26h
    kube-system         kube-proxy-gdtdt                                           1/1     Running     0          26h
    kube-system         kube-scheduler-localhost                                   1/1     Running     0          26h
    kube-system         metrics-server-6fcdc7bddc-ggsnm                            1/1     Running     0          25h

The temporary environment's Rancher loses control of the intranet k8s cluster.

The intranet Rancher takes over management of the intranet k8s cluster.

Running two Rancher instances in the temporary environment (on different ports) gives the same result.

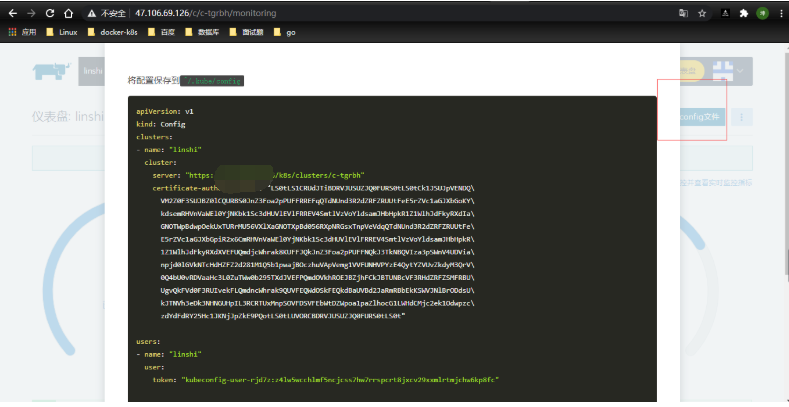

Manage the k8s cluster with the kubeconfig generated by Rancher

    [root@mobanji .kube]# vim config
    apiVersion: v1
    kind: Config
    clusters:
    - name: "linshi"
      cluster:
        server: "https://4xx.1xx.6x.1x6/k8s/clusters/c-tgrbh"
        certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJpVENDQ\
          VM2Z0F3SUJBZ0lCQURBS0JnZ3Foa2pPUFFRREFqQTdNUnd3R2dZRFZRUUtFeE5rZVc1aGJXbGoKY\
          kdsemRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR1Z1WlhJdFkyRXdIa\
          GNOTWpBdwpOekUxTURrMU56VXlXaGNOTXpBd056RXpNRGsxTnpVeVdqQTdNUnd3R2dZRFZRUUtFe\
          E5rZVc1aGJXbGpiR2x6CmRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR\
          1Z1WlhJdFkyRXdXVEFUQmdjcWhrak8KUFFJQkJnZ3Foa2pPUFFNQkJ3TkNBQVIza3pSWnV4UDVia\
          npjd0lGVkNTcHdHZFZ2d281M1Q5b1pwajBOczhuVApVemg1VVFUNHVPYzE4QytYZVUvZkdyM3QrV\
          0Q4bU0vRDVaaHc3L0ZuTWw0b295TXdJVEFPQmdOVkhROEJBZjhFCkJBTUNBcVF3RHdZRFZSMFRBU\
          UgvQkFVd0F3RUIvekFLQmdncWhrak9QUVFEQWdOSkFEQkdBaUVBd2JaRmRBbEkKSWVJNlBrODdsU\
          kJTNVh3eDk3NHNGUHpIL3RCRTUxMnpSOVFDSVFEbWtDZWpoa1paZlhocG1LWHdCMjc2ek1Odwpzc\
          zdYdFdRY25Hc1JKNjJpZkE9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0t"
    users:
    - name: "linshi"
      user:
        token: "kubeconfig-user-rjd7z:z4lw5wcchlmf5ncjcss7hw7rrspcrt8jxcv29x

This shows that the kubeconfig generated by Rancher can be used to manage a specific cluster.

Now switch back to the original config and check whether the cluster can still be managed:

    [root@mobanji .kube]# mv config.bak02 config
    mv: overwrite 'config'? y
    [root@mobanji .kube]# kubectl get pods -A
    NAMESPACE       NAME                                                   READY   STATUS      RESTARTS   AGE
    arms-prom       arms-prometheus-ack-arms-prometheus-7b4d578f86-ff824   1/1     Running     0          71m
    arms-prom       kube-state-metrics-864b9c5857-ljb6l                    1/1     Running     0          71m
    arms-prom       node-exporter-6ttg5                                    2/2     Running     0          71m
    arms-prom       node-exporter-rz7rr                                    2/2     Running     0          71m
    arms-prom       node-exporter-t45zj                                    2/2     Running     0          71m
    cattle-system   cattle-cluster-agent-57c67fb54c-6pr9q                  1/1     Running     0          24m
    cattle-system   cattle-node-agent-44d7g                                1/1     Running     0          41m
    cattle-system   cattle-node-agent-f2b62                                0/1     Pending     0          24m
    cattle-system   cattle-node-agent-qg86b                                1/1     Running     0          41m
    kube-system     ack-node-problem-detector-daemonset-2r9bg              1/1     Running     0          71m
    kube-system     ack-node-problem-detector-daemonset-mjk4k              1/1     Running     0          71m
    kube-system     ack-node-problem-detector-daemonset-t94p4              1/1     Running     0          71m
    kube-system     ack-node-problem-detector-eventer-6cb57ffb68-gl5n5     1/1     Running     0          71m
    kube-system     alibaba-log-controller-795d7486f8-jrcv2                1/1     Running     2          71m
    kube-system     alicloud-application-controller-bc84954fb-z5dq9        1/1     Running     0          71m
    kube-system     alicloud-monitor-controller-7c8c5485f4-xdrdd           1/1     Running     0          71m
    kube-system     aliyun-acr-credential-helper-59b59477df-p7vxc          1/1     Running     0          71m
    kube-system     coredns-79989b94b6-47vll                               1/1     Running     0          71m
    kube-system     coredns-79989b94b6-dqpjm                               1/1     Running     0          71m
    kube-system     csi-plugin-2kjc9                                       9/9     Running     0          71m
    kube-system     csi-plugin-5lt7b                                       9/9     Running     0          71m
    kube-system     csi-plugin-px52m                                       9/9     Running     0          71m
    kube-system     csi-provisioner-56d7fb44b6-6czqx                       8/8     Running     0          71m
    kube-system     csi-provisioner-56d7fb44b6-jqsrt                       8/8     Running     1          71m
    kube-system     kube-eventer-init-mv2vj                                0/1     Completed   0          71m
    kube-system     kube-flannel-ds-4xbhr                                  1/1     Running     0          71m
    kube-system     kube-flannel-ds-mfg7m                                  1/1     Running     0          71m
    kube-system     kube-flannel-ds-wnrp2                                  1/1     Running     0          71m
    kube-system     kube-proxy-worker-44tkt                                1/1     Running     0          71m
    kube-system     kube-proxy-worker-nw2g6                                1/1     Running     0          71m
    kube-system     kube-proxy-worker-s78vl                                1/1     Running     0          71m
    kube-system     logtail-ds-67lkm                                       1/1     Running     0          71m
    kube-system     logtail-ds-68fdz                                       1/1     Running     0          71m
    kube-system     logtail-ds-bkntm                                       1/1     Running     0          71m
    kube-system     metrics-server-f88f9b8d8-l9zl4                         1/1     Running     0          71m
    kube-system     nginx-ingress-controller-758cdc676c-k8ffj              1/1     Running     0          71m
    kube-system     nginx-ingress-controller-758cdc676c-pxzxd              1/1     Running     0          71m

This shows that Rancher's kubeconfig does not affect the k8s cluster's own config.
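Swapping files with `mv` works, but it overwrites `~/.kube/config` on every switch. As an alternative sketch (not what was done in this test), kubectl also honours the `KUBECONFIG` environment variable and the `--kubeconfig` flag, so the Rancher-generated file can be kept under its own name. The helper name and the file name `rancher-linshi.yaml` are hypothetical.

```shell
# Run one command with a specific kubeconfig, leaving ~/.kube/config untouched.
# $1 = path to the kubeconfig file, remaining arguments = the command to run.
with_kubeconfig() {
  if [ "$#" -lt 2 ]; then
    echo "usage: with_kubeconfig <kubeconfig> <command> [args...]" >&2
    return 2
  fi
  cfg=$1
  shift
  # The assignment applies only to the environment of this one command.
  KUBECONFIG="$cfg" "$@"
}
```

For example, `with_kubeconfig ~/.kube/rancher-linshi.yaml kubectl get pods -A` goes through Rancher, while a plain `kubectl get pods -A` keeps using the cluster's own config.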

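Problems encountered

The managed k8s cluster in the temporary environment cannot pull images

1. Virtual router

2. Firewall

The temporary environment has no public IP, so Rancher cannot be deployed

Bind an EIP

Deploying Rancher brings down the temporary environment's k8s cluster

Deploying Rancher on myaliyun caused the controller and scheduler of the temporary environment's k8s cluster to crash.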

I observed the following: with k8s deployed on an Aliyun ECS instance, starting a Rancher on it with

    docker run -d --restart=unless-stopped \
      -p 1880:80 -p 1443:443 \
      rancher/rancher:latest

causes the k8s cluster to crash, as shown below:

    kube-system   kube-controller-manager-zisefeizhu   0/1   Error              10   117s
    kube-system   kube-controller-manager-zisefeizhu   0/1   CrashLoopBackOff   10   118s
    kube-system   kube-scheduler-zisefeizhu            0/1   Error              9    3m59s
    kube-system   kube-scheduler-zisefeizhu            0/1   CrashLoopBackOff   9    4m3s
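States like these are easy to miss in a long listing. A minimal sketch of a filter that prints only pods whose STATUS is neither Running nor Completed, assuming the default `kubectl get pods -A` column layout with a header line (the function name is hypothetical):

```shell
# Read `kubectl get pods -A` output on stdin and print "namespace/name status"
# for every pod that is not Running or Completed (e.g. Error, CrashLoopBackOff, Pending).
unhealthy_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}
```

It would be used as `kubectl get pods -A | unhealthy_pods`; empty output means every pod is Running or Completed.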

The specific cause: Rancher is trying to take control of the controller and scheduler of my k8s cluster.

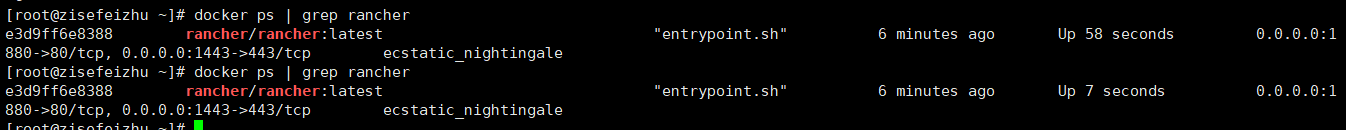

There is one more symptom: the Rancher container started with docker keeps restarting.

[Screenshot text, only partly recoverable: two consecutive runs of `docker ps | grep rancher` on zisefeizhu. Both show the rancher/rancher:latest container (command "entrypoint.sh", ports 0.0.0.0:1880->80/tcp and 0.0.0.0:1443->443/tcp); in the second run the container was created minutes earlier but has been up for only seconds.]

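This behaviour does not occur on the self-built k8s cluster in the intranet, but it does occur on the k8s cluster on Alibaba Cloud.

Insufficient resources lead to resource contention, and the cluster goes down.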
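If resource shortage is indeed the cause, a pre-flight check before starting Rancher may help. A minimal sketch, assuming a Linux host that exposes `MemAvailable` in `/proc/meminfo`; the function names and the 4096 MiB threshold in the usage line are illustrative assumptions, not a documented Rancher requirement:

```shell
# Print the available memory in MiB.
# $1 = optional path to a meminfo-style file (defaults to /proc/meminfo).
mem_available_mib() {
  awk '/^MemAvailable:/ { printf "%d\n", $2 / 1024 }' "${1:-/proc/meminfo}"
}

# Succeeds when at least $1 MiB are available.
# $2 = optional meminfo path, mainly useful for testing.
enough_memory() {
  avail=$(mem_available_mib "${2:-/proc/meminfo}")
  [ "${avail:-0}" -ge "$1" ]
}
```

For example: `enough_memory 4096 || echo "less than 4096 MiB available; Rancher may compete with the control plane" >&2`.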
